In machine learning, prediction and inference are two different concepts. Prediction is the process of using a model to estimate the value of the response for new inputs, such as an outcome that has not yet happened. Inference is the process of understanding the relationship between the predictor and response variables. In this blog post, you will learn about the differences between prediction and inference with the help of examples. Before getting into the details of inference and prediction, let’s quickly recall some basic machine learning concepts.

What is machine learning and how is it related to inference & prediction?

Machine learning is about learning an approximate function that can be used to predict the value of a response (dependent) variable. This function approximates the true, unknown function that maps inputs to real-world outputs. The approximate function is also called a mathematical model or machine learning model. Because it is only an approximation, there will always be some error, which is called the prediction error. Part of this error is reducible, because the model can be improved further, while the rest is irreducible: it comes from noise or from factors the predictors do not capture, so no model can remove it.
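The split between reducible and irreducible error shows up in a small simulation. This is a minimal sketch under assumptions made up for illustration: the "true" function is f(x) = 2x + 1 and the noise is Gaussian with standard deviation 1. Even the true function leaves an error close to the noise variance (the irreducible part), while a crude model that ignores the predictor and always predicts the average response does far worse; the gap between the two is reducible.

```python
import random


def true_f(x):
    # Hypothetical "true" function, chosen only for this illustration
    return 2 * x + 1


def simulate_errors(n=10_000, sigma=1.0, seed=42):
    """Return the mean squared error of the true function and of a crude model."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    # Observed response = true function + irreducible noise
    ys = [true_f(x) + rng.gauss(0, sigma) for x in xs]

    # Even a perfect model keeps an error of about sigma**2 (irreducible)
    mse_true = sum((y - true_f(x)) ** 2 for x, y in zip(xs, ys)) / n

    # A crude model that ignores x and always predicts the mean of y
    mean_y = sum(ys) / n
    mse_crude = sum((y - mean_y) ** 2 for y in ys) / n
    return mse_true, mse_crude


mse_true, mse_crude = simulate_errors()
print(f"true f: {mse_true:.2f}  crude model: {mse_crude:.2f}")
```

Improving the crude model can close most of the gap, but no model can push the error much below the noise variance.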

The machine learning model is built using one or more input variables, which are also called predictors or independent variables. The output of this model is the response (dependent) variable that we want to predict.

On one hand, the model is used to predict the value of the response variable from the predictor variables. On the other hand, the model can also be used to understand (infer) the relationship between the response and the predictor variables.

Machine learning models are built to achieve the following objectives:

- Make predictions

- Draw inferences about the relationships between variables

- Both of the above

For more details on understanding basic concepts of machine learning, check out my related post – What is Machine Learning? Concepts & Examples

What are prediction and inference, and what’s the difference?

Prediction is about estimating the value of the response variable based on the predictor (input) variables. As discussed in the previous section, prediction can be described as mapping a set of predictor values to their corresponding output.

Inference is about understanding the relationship between the response and the predictor variables. It is about understanding the following:

- Which predictors are associated with the response variable?

- How much does the response variable change (in magnitude) when a predictor variable changes?

- In which direction (positive or negative) does the response variable change when a predictor variable changes?

- Can the relationship be described using a linear equation, or is the relationship between the predictor and response variables more complex?
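A common way to make these questions concrete is to assume a linear model, as in standard linear regression. This form is an assumption for illustration, since the real relationship may well be nonlinear:

```latex
\begin{aligned}
Y &= \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p + \varepsilon \\
\frac{\partial\, \mathbb{E}[Y \mid X]}{\partial X_j} &= \beta_j \quad (j = 1, \dots, p)
\end{aligned}
```

Under this model, each question maps to the coefficient β_j: if β_j = 0, predictor X_j is not associated with Y once the other predictors are held fixed; |β_j| is the average change in Y for a one-unit increase in X_j; the sign of β_j gives the direction of that change; and if the linear form fits the data poorly, the relationship is likely more complex.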

Inference can be further classified as follows:

- Univariate Inference (relationship between a single predictor and the response): This type of inference helps us understand how the value of the response variable changes with respect to a change in a single predictor variable.

- Bivariate Inference (relationship between the response and two predictor variables): This type of inference helps us understand how the value of the response variable changes with respect to changes in two predictor variables.

- Multivariate Inference (relationship between the response and more than two predictor variables): This type of inference helps us understand how the value of the response variable changes with respect to changes in multiple predictor variables.

While prediction is used to estimate the value of the response variable, inference is used to understand the relationship between the predictors and the response variable.

Prediction & inference explained with examples

Here is an example of prediction and the need to build a machine learning model for prediction:

Consider a company that is interested in conducting a marketing campaign. The goal is to identify individuals who are likely to respond positively to an email marketing campaign, based on observations of demographic variables (predictor variables) measured on each individual. In this case, the response to the marketing campaign (either positive or negative) represents the response variable. The company is not interested in obtaining a deep understanding of the relationships between each individual predictor and the response; instead, the company simply wants to accurately predict the response using the predictors. This is an example of modeling for prediction.
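For the marketing example, the model is judged only by how well it predicts. The sketch below fits a logistic regression by plain batch gradient descent and reports accuracy on customers it has not seen. Everything about the data is hypothetical: two standardized demographic features (age and income) and an assumed response mechanism used only to generate toy data.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_customers(n, rng):
    """Hypothetical customers: standardized (age, income) and a 0/1 response."""
    rows = []
    for _ in range(n):
        age, income = rng.gauss(0, 1), rng.gauss(0, 1)
        # Assumed response mechanism, used only to generate toy data
        p = sigmoid(-0.5 - 1.0 * age + 2.0 * income)
        rows.append(((age, income), 1 if rng.random() < p else 0))
    return rows


def fit_logistic(rows, lr=0.5, epochs=300):
    """Batch gradient descent on the average log-loss; returns [bias, w_age, w_income]."""
    w = [0.0, 0.0, 0.0]
    n = len(rows)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (x1, x2), y in rows:
            err = sigmoid(w[0] + w[1] * x1 + w[2] * x2) - y
            grad[0] += err
            grad[1] += err * x1
            grad[2] += err * x2
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


def predict(w, x):
    x1, x2 = x
    return 1 if sigmoid(w[0] + w[1] * x1 + w[2] * x2) >= 0.5 else 0


rng = random.Random(0)
data = make_customers(2000, rng)
train, test = data[:1500], data[1500:]
w = fit_logistic(train)

# For a prediction goal, what matters is accuracy on unseen customers
accuracy = sum(predict(w, x) == y for x, y in test) / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Note that the company never needs to look at the fitted weights `w`; only the held-out accuracy matters for this goal.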

Here is an example of inference and the need to build a machine learning model for inference:

Suppose the company is interested in understanding the impact of advertising in different media on its sales. It wants answers to the following questions:

- Which media are associated with sales?

- Which media generate the biggest boost in sales?

- How large an increase in sales is associated with a given increase in radio advertising compared with TV advertising?

The above example falls into the inference paradigm. Here you would build a model to understand these relationships rather than to predict sales.
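The advertising questions can be explored with a multiple linear regression of sales on the three media budgets. This is a minimal, standard-library-only sketch under stated assumptions: the budgets and the "true" coefficients (including newspaper having no effect) are hypothetical, and ordinary least squares is solved via the normal equations for brevity rather than with a statistics package.

```python
import random


def solve(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows, ys):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    X = [[1.0] + list(r) for r in rows]  # prepend an intercept column
    p = len(X[0])
    xtx = [[sum(xi[j] * xi[k] for xi in X) for k in range(p)] for j in range(p)]
    xty = [sum(xi[j] * y for xi, y in zip(X, ys)) for j in range(p)]
    return solve(xtx, xty)


rng = random.Random(1)
budgets, sales = [], []
for _ in range(500):
    tv, radio, news = rng.uniform(0, 300), rng.uniform(0, 50), rng.uniform(0, 100)
    budgets.append((tv, radio, news))
    # Hypothetical ground truth: newspaper spending has no effect on sales
    sales.append(3.0 + 0.05 * tv + 0.20 * radio + 0.0 * news + rng.gauss(0, 1.5))

intercept, b_tv, b_radio, b_news = ols(budgets, sales)
print(f"TV: {b_tv:.3f}  radio: {b_radio:.3f}  newspaper: {b_news:.3f}")
print(f"radio vs TV, per unit of spend: {b_radio / b_tv:.1f}x")
```

Reading the coefficients answers the questions: a newspaper coefficient near zero suggests no association, the larger per-unit coefficient for radio suggests a bigger boost per unit of spend, and `b_radio / b_tv` compares the two. In practice you would also check standard errors or confidence intervals before drawing such conclusions.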

Here is another inference example: a company wants to know to what extent product price is associated with sales. The model would help in understanding the impact on sales of variables such as product pricing, store location, discount levels, competitor prices, and so forth. In this situation, one is most interested in the association between each variable and the probability of purchase. This is an example of modeling for inference.

Apart from modeling purely for prediction or purely for inference, some models are built for both. Let’s take the example of a restaurant to explain the concept. Suppose a restaurant owner wants to analyze the factors that influence customer satisfaction and loyalty. Some possible input variables are the quality of food, ambiance, service, location, price, menu variety, and so on. The owner may want to understand the association between each individual input variable and customer satisfaction, for example, how much the quality of food affects customer satisfaction. This is an inference problem. Alternatively, the owner may simply want to predict a customer’s overall satisfaction level based on their experience at the restaurant, which could help identify areas for improvement and guide actions to enhance the customer experience. This is a prediction problem.

When should you use machine learning methods for prediction vs. inference?

Whether to use a machine learning method for prediction or for inference depends on the objective of the analysis.

Prediction modeling aims to predict the outcome for a given input variable or set of input variables. In many cases, it seeks to forecast the future based on the past, for instance, predicting stock prices or the customer churn rate of a business. In such cases, a predictive modeling approach that focuses on identifying patterns and trends in historical data would be suitable. Some common machine learning methods used for prediction modeling include linear regression, decision trees, neural networks, and support vector machines.

On the other hand, inference modeling aims to establish the relationship between two or more variables. The goal is to understand how the input variables are related to the output variable; drawing causal conclusions usually requires additional assumptions or a suitable study design. For example, one might study the association between a specific risk factor and a disease. In this case, an inference modeling approach that focuses on discovering relationships and patterns in the data would be appropriate. Some common machine learning methods used for inference modeling include logistic regression, linear discriminant analysis, and Bayesian networks.

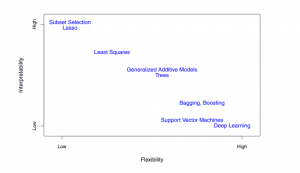

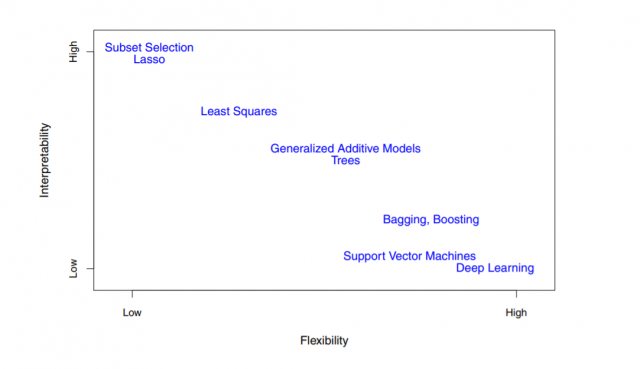

Here is a diagram that compares different machine learning algorithms (linear and non-linear) in terms of interpretability vs. flexibility. Highly flexible methods suit prediction, but their interpretability is low. Highly interpretable methods suit inference, although their predictive accuracy may suffer. This diagram is taken from the book An Introduction to Statistical Learning.

Prediction and inference are two different aspects of machine learning. Prediction is about accurately estimating a response variable, while inference deals with understanding the relationship between the predictor variables and the response variable. The difference between prediction and inference models can be seen in examples such as predicting the success of a marketing campaign or understanding how advertising in different media influences sales. You may use linear regression for an inference model, while non-linear methods often perform better when prediction is your objective.
