In this post, you will learn about an approach for finding images that are similar to an input image (the query) among a large collection of images, using machine learning / deep learning techniques. This is also called reverse image search. Conventional image search, by contrast, generally looks for images based on keywords.

Here are the key components of the solution for reverse image search:

- A database storing images along with their associated numerical vectors, also called embeddings.

- A deep learning model based on a convolutional neural network (CNN) that creates numerical feature vectors (aka embeddings) for images.

- A module that searches the image database for the embeddings closest to the embedding of the input image (query), using a nearest neighbor search algorithm.

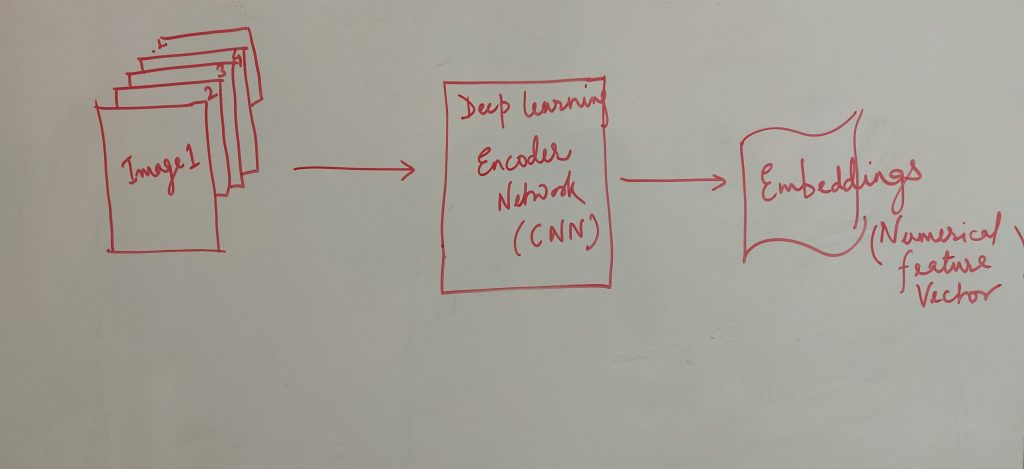

Deep Learning Model (CNN) for Creating Embeddings

The first step is to create embeddings (numerical feature vectors) that represent each image. These can be created using a convolutional neural network autoencoder whose encoder layers decrease in size. The output of the last encoder layer (the bottleneck) is used as the embedding, a lower-dimensional representation of the input image. The diagram below illustrates this:

Figure 1. Create image embeddings using Autoencoder Network (CNN)

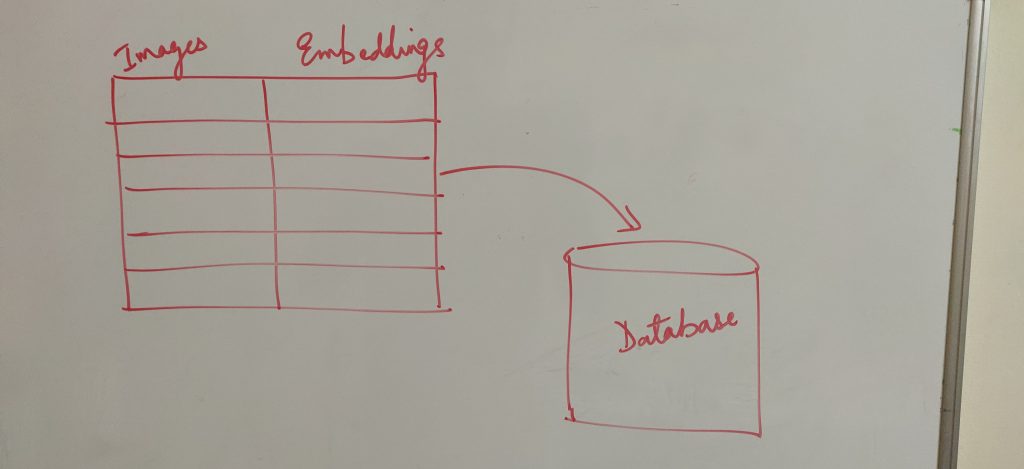
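The following is a minimal sketch of such a convolutional autoencoder in PyTorch. It assumes 64x64 RGB input images and a 64-dimensional embedding; the layer sizes, the `ConvAutoencoder` class and the `embed` and `train_autoencoder` helpers are illustrative choices, not part of the original design. The encoder halves the spatial size at each strided convolution and ends in a linear bottleneck that produces the embedding; the decoder exists only so the network can be trained to reconstruct its input.

```python
import torch
import torch.nn as nn


class ConvAutoencoder(nn.Module):
    """Convolutional autoencoder whose bottleneck output serves as the image embedding."""

    def __init__(self, embedding_dim: int = 64):
        super().__init__()
        # Encoder: each strided conv halves the spatial size (64 -> 32 -> 16 -> 8)
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),   # -> 16 x 32 x 32
            nn.ReLU(),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),  # -> 32 x 16 x 16
            nn.ReLU(),
            nn.Conv2d(32, 64, kernel_size=3, stride=2, padding=1),  # -> 64 x 8 x 8
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(64 * 8 * 8, embedding_dim),                   # bottleneck = embedding
        )
        # Decoder mirrors the encoder to reconstruct a 3 x 64 x 64 image
        self.decoder = nn.Sequential(
            nn.Linear(embedding_dim, 64 * 8 * 8),
            nn.ReLU(),
            nn.Unflatten(1, (64, 8, 8)),
            nn.ConvTranspose2d(64, 32, kernel_size=4, stride=2, padding=1),  # -> 32 x 16 x 16
            nn.ReLU(),
            nn.ConvTranspose2d(32, 16, kernel_size=4, stride=2, padding=1),  # -> 16 x 32 x 32
            nn.ReLU(),
            nn.ConvTranspose2d(16, 3, kernel_size=4, stride=2, padding=1),   # -> 3 x 64 x 64
            nn.Sigmoid(),  # pixel values in [0, 1]
        )

    def forward(self, x):
        # Full encode-decode pass, used only during training
        return self.decoder(self.encoder(x))

    @torch.no_grad()
    def embed(self, x):
        # Encoder only: maps a batch of images to embedding vectors
        return self.encoder(x)


def train_autoencoder(model, images, epochs=5, lr=1e-3):
    """Train the model to reconstruct its input with MSE loss; returns the last loss."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    model.train()
    for _ in range(epochs):
        # Full-batch update for brevity; real training would iterate over mini-batches
        optimizer.zero_grad()
        loss = loss_fn(model(images), images)
        loss.backward()
        optimizer.step()
    return loss.item()


torch.manual_seed(0)
images = torch.rand(8, 3, 64, 64)  # random stand-in for a batch of real images
model = ConvAutoencoder(embedding_dim=64)
train_autoencoder(model, images, epochs=2)
model.eval()
embeddings = model.embed(images)
print(embeddings.shape)  # torch.Size([8, 64])
```

After training on real images, only the encoder is needed to produce embeddings, so the decoder can be discarded at search time.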

Database for Storing Embeddings and Image

Once the embeddings are created using the autoencoder network (CNN), they need to be saved in a database that stores each image together with its associated embedding. To support comprehensive search, one would need to gather a large number of images (potentially millions), create their embeddings, and store them in the database. The following diagram illustrates this:

Figure 2. Embeddings Database

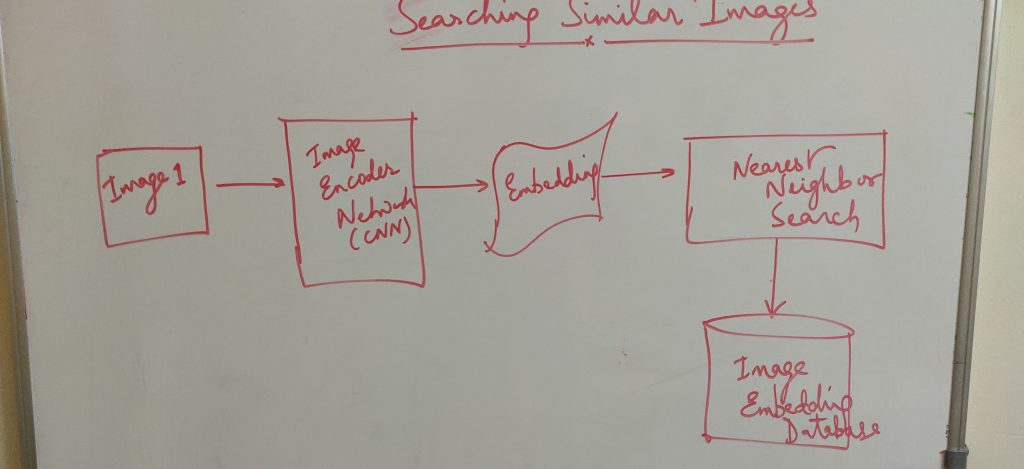
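As a minimal sketch of such a store, the snippet below uses Python's built-in `sqlite3` module together with NumPy. It assumes each row keeps an image path (rather than the raw image bytes) plus its embedding serialized as float32 bytes; the `images` table layout and the `create_db`, `add_image` and `load_embeddings` helpers are hypothetical.

```python
import sqlite3

import numpy as np


def create_db(path=":memory:"):
    """Open (or create) a database with one row per image and its embedding."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS images ("
        "id INTEGER PRIMARY KEY, "
        "image_path TEXT NOT NULL, "
        "embedding BLOB NOT NULL)"
    )
    return conn


def add_image(conn, image_path, embedding):
    """Insert one image path with its embedding, serialized as float32 bytes."""
    blob = np.asarray(embedding, dtype=np.float32).tobytes()
    cur = conn.execute(
        "INSERT INTO images (image_path, embedding) VALUES (?, ?)",
        (image_path, blob),
    )
    conn.commit()
    return cur.lastrowid


def load_embeddings(conn):
    """Return image ids, paths and an (n, d) float32 matrix of all stored embeddings."""
    rows = conn.execute(
        "SELECT id, image_path, embedding FROM images ORDER BY id"
    ).fetchall()
    if not rows:
        return [], [], np.empty((0, 0), dtype=np.float32)
    ids = [row[0] for row in rows]
    paths = [row[1] for row in rows]
    # Decode each BLOB back into a float32 vector and stack them into a matrix
    matrix = np.stack([np.frombuffer(row[2], dtype=np.float32) for row in rows])
    return ids, paths, matrix


conn = create_db()
add_image(conn, "images/cat.jpg", [0.1, 0.9, 0.3])
add_image(conn, "images/dog.jpg", [0.8, 0.2, 0.5])
ids, paths, matrix = load_embeddings(conn)
print(paths, matrix.shape)
```

At the scale of millions of images, a dedicated vector database or an approximate nearest neighbor index would usually take the place of loading every embedding from a single table like this.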

Nearest Neighbor Search for Image Search

Once the database is created, the next step is to search it for one or more images that match an input image.

Figure 3. Reverse image search using Autoencoder network (CNN), Nearest neighbor algorithm and Embedding DB

This involves the following steps:

- For the given input image (query), an embedding is created using the autoencoder network.

- The image-embeddings database is then searched for the embeddings nearest to the query embedding using a nearest neighbor algorithm, and the images associated with those embeddings are returned as the results.
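The search step can be sketched as a brute-force (exact) k-nearest-neighbor search over all stored embeddings. This sketch assumes cosine similarity as the similarity measure (Euclidean distance is another common choice) and a hypothetical `nearest_neighbors` helper that returns the indices and scores of the `k` most similar embeddings.

```python
import numpy as np


def nearest_neighbors(query, embeddings, k=3, eps=1e-12):
    """Return indices and cosine similarities of the k embeddings closest to query."""
    # L2-normalize so that a dot product equals cosine similarity (eps avoids division by zero)
    q = query / (np.linalg.norm(query) + eps)
    e = embeddings / (np.linalg.norm(embeddings, axis=1, keepdims=True) + eps)
    sims = e @ q                   # one similarity score per stored embedding
    k = min(k, len(sims))
    top = np.argsort(-sims)[:k]    # indices sorted by descending similarity
    return top, sims[top]


# Toy database of three 2-D embeddings (stand-ins for real image embeddings)
db = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
query = np.array([1.0, 0.1])
idx, scores = nearest_neighbors(query, db, k=2)
print(idx)  # [0 2]
```

Brute force compares the query with every stored embedding, which becomes slow for millions of images; approximate nearest neighbor libraries such as FAISS are commonly used at that scale, trading a little accuracy for much faster lookups.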

Real-world applications of Image Search

There are various real-world use cases where image search can prove very useful. One of them is digital pathology: Google published a project called SMILY, which, given an image patch from a pathology slide, finds similar image patches. SMILY stands for “Similar Medical Images Like Yours”. Another possible application is palmistry, where one might want to make predictions about a person's life based on an image of their palm.

Summary

If you are planning to build a reverse image search solution, consider the three key architecture components described in this article:

- A deep learning CNN model (autoencoder) that creates embeddings (numerical feature vectors) representing images

- A database of images and their associated embeddings

- A nearest neighbor search module that finds the stored embeddings closest to the query embedding
